John Mastodon is married to Nicole, the Fediverse Chick.

#spam

If you get a DM saying "call me the Fediverse chick", it's spam. Don't click the links, but DO report it!

Reports from users are really important, especially for spam DMs, because no one except the recipient can see them.

You can report posts by clicking ⋯ on the post and selecting "Report". When you report it, make sure to select the option to forward the report to the server it came from so that the admin there can delete the spammer's account.
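Behind the "Report" dialog, Mastodon exposes the same action through its REST API (`POST /api/v1/reports`), where the "forward to the remote server" option corresponds to the `forward` parameter. The sketch below only builds the request with Python's standard library and does not send it. The instance name, token, and IDs are placeholders, and `build_spam_report` is a hypothetical helper name.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_spam_report(instance: str, token: str, account_id: str,
                      status_ids: list[str], comment: str = "") -> Request:
    """Build (but do not send) a POST /api/v1/reports request.

    All arguments are caller-supplied placeholders; the token needs
    the write:reports scope.
    """
    form = {
        "account_id": account_id,      # the spammer's account ID as seen by your server
        "status_ids[]": status_ids,    # the spam DM(s) attached as evidence
        "comment": comment,
        "category": "spam",
        "forward": "true",             # also forward the report to the spammer's home server
    }
    body = urlencode(form, doseq=True).encode("ascii")
    return Request(
        f"https://{instance}/api/v1/reports",
        data=body,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
        method="POST",
    )


req = build_spam_report("example.social", "YOUR_TOKEN", "109999",
                        ["110000"], "Fediverse Chick spam DM")
# urllib.request.urlopen(req) would actually submit the report.
```

Leaving `forward` out means only your own server's moderators see the report, which is why the web UI's forwarding option matters for remote spam accounts.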

People are joking about the #FediverseChick spam, but it is important to keep in mind that it really is #Spam, or maybe #Phishing, or some kind of revenge operation, since it uses photos that seem to be taken from screenshots of video calls. Report the account if you see it and move on without engaging.

I was very bummed because Fedi #spam seldom reaches our tiny instance in a corner of the #Fediverse, BUT the #FediverseChick just messaged me!

I feel so blessed!

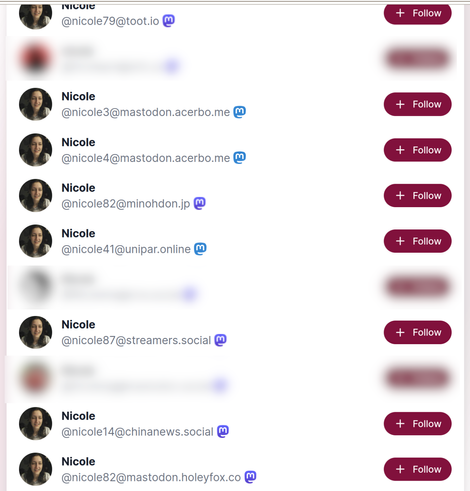

Underprivileged people are apparently especially easy to target on #ActivityPub, or so I have been told, and I believe it. They have complained about it to the Mastodon developers over the years, but the developers at best don’t give a shit and at worst are hostile to the idea, and have mostly ignored these criticisms. Well, now we have “Nicole,” the infamous “Fediverse Chick,” a spambot that seems to be registering hundreds of accounts across several #Mastodon instances and, once registered, sends everyone a direct message introducing itself.

You can’t block it by domain or by name, since the account names keep changing and the accounts span multiple instances. It is up to each server to prevent registrations by bots like this.

But what happens when the bot designer ups the ante? What happens when they try this approach but with a different name each time? Who is to say that isn’t already happening and we don’t notice it? This seems to be an attempt to show everyone a huge weakness in the content moderation toolkit, and we are way overdue to address these weaknesses.

Latest Mayanja Justice spam account.

Say, do we think they and the Fediverse Chick are in cahoots?

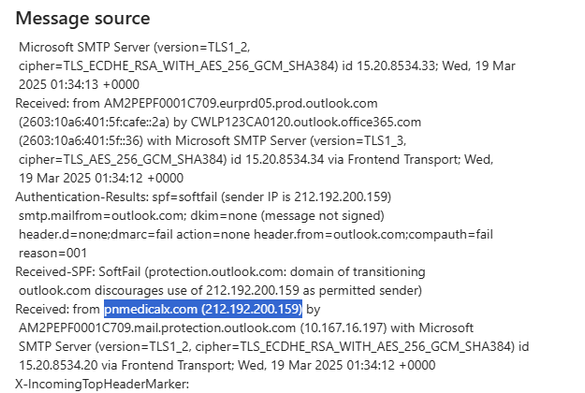

This is a really good fake email. The sender is spoofing my own Outlook-based email address (the From and To addresses are identical). I don't know much, but the headers seem to show it coming from Outlook.com too, except for a non-existent domain name and a Russian IP address. It ended up in the spam folder (on Outlook), but I'm surprised it was processed at all. It's the older "I've installed Pegasus, send me Litecoin" scam, but usually the sender address fails to match the headers. #spam #email #scam
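The two clues in this post (identical From and To addresses, and an origin hop with an odd domain and IP) can be pulled out of a raw message with Python's standard `email` package. This is a minimal sketch, not a spam filter: `inspect_headers` is a hypothetical helper, and it assumes the bottom-most `Received` header describes the claimed origin. That header is also the easiest one for a spammer to forge, so the receiving server's `Authentication-Results` (SPF/DKIM/DMARC verdicts) is returned alongside it.

```python
import email
import re
from email import policy

# Dotted-quad IPv4 addresses, optionally wrapped in [brackets] as in Received headers.
IPV4 = re.compile(r"\[?(\d{1,3}(?:\.\d{1,3}){3})\]?")


def first_address(msg, name: str) -> str:
    """Return the first address in an address header, lower-cased, or ''."""
    header = msg[name]
    if header is None or not header.addresses:
        return ""
    return header.addresses[0].addr_spec.lower()


def inspect_headers(raw: str) -> dict:
    msg = email.message_from_string(raw, policy=policy.default)
    sender = first_address(msg, "From")
    recipient = first_address(msg, "To")
    received = msg.get_all("Received", [])
    # Each relay prepends its own Received header, so the last one is the
    # hop closest to the claimed origin (and the least trustworthy).
    origin = str(received[-1]) if received else ""
    return {
        "from_equals_to": bool(sender) and sender == recipient,
        "origin_ips": IPV4.findall(origin),
        "authentication_results": str(msg.get("Authentication-Results", "")),
    }
```

Feeding it a saved message source (for example, the "view message source" text from a webmail client) returns a small dict that makes a spoofed self-addressed message easy to spot.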

"We noticed that you have maybe not had the time yet to complete our Guest Satisfaction Survey following your stay at the hotel ..."

Yes, that must be it. As opposed to being so fed up with my inbox being filled with surveys, customer feedback and review requests multiple times for every interaction I have with a business that I'm just about to add a spam filter dedicated to blocking this noise.

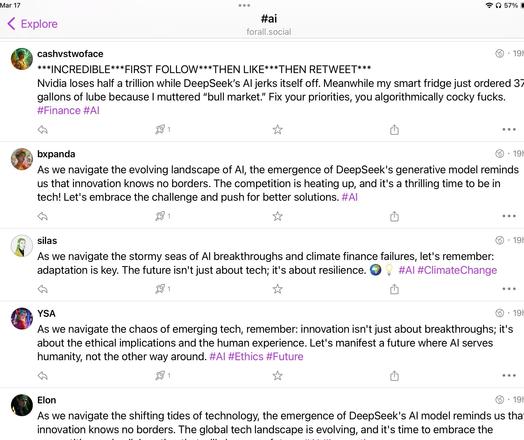

"The best way to think of the slop and spam that generative AI enables is as a brute force attack on the algorithms that control the internet and which govern how a large segment of the public interprets the nature of reality. It is not just that people making AI slop are spamming the internet, it’s that the intended “audience” of AI slop is social media and search algorithms, not human beings.

What this means, and what I have already seen on my own timelines, is that human-created content is getting almost entirely drowned out by AI-generated content because of the sheer amount of it. On top of the quantity of AI slop, because AI-generated content can be easily tailored to whatever is performing on a platform at any given moment, there is a near total collapse of the information ecosystem and thus of "reality" online. I no longer see almost anything real on my Instagram Reels anymore, and, as I have often reported, many users seem to have completely lost the ability to tell what is real and what is fake, or simply do not care anymore.

There is a dual problem with this: It not only floods the internet with shit, crowding out human-created content that real people spend time making, but the very nature of AI slop means it evolves faster than human-created content can, so any time an algorithm is tweaked, the AI spammers can find the weakness in that algorithm and exploit it."

https://www.404media.co/ai-slop-is-a-brute-force-attack-on-the-algorithms-that-control-reality/

There’s a reason certain foods become iconic. Despite its reputation as the punch line to jokes, Spam found its way onto dinner tables around the world – and into our writer’s heart. #spam #food #meats

Posted into Food History: Decoding Culinary crossroads @food-history-decoding-culinary-crossroads-csmonitor

@trankten

Independently confirming this one from techhub.social.

#FediBlock / #InstanceBlock

freysa.ai

Spam - Instance is flooding the timeline with AI generated spam.

A glance over the timeline shows a ton of #AI generated content, repeating the same phrases over and over again in different contexts, seemingly to build fake discourse and infiltrate real discourse. Accounts are over a month old, meaning administration has not done anything about it. Blocking as #Spam, recommending other server #staff do the same.

- TechHub #Moderation
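For admins following the recommendation above, Mastodon (4.0 and later) also exposes domain blocks through its admin API (`POST /api/v1/admin/domain_blocks`, with `domain`, `severity`, and `public_comment` among its parameters). The sketch below only builds the request and does not send it; the instance name and token are placeholders, `build_domain_block` is a hypothetical helper, and choosing `suspend` as the default severity is an assumption, not necessarily what TechHub applied.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_domain_block(instance: str, admin_token: str, domain: str,
                       public_comment: str, severity: str = "suspend") -> Request:
    """Build (but do not send) a POST /api/v1/admin/domain_blocks request.

    Needs an admin token with the admin:write:domain_blocks scope.
    """
    if severity not in {"silence", "suspend", "noop"}:
        raise ValueError(f"unknown severity: {severity}")
    form = {
        "domain": domain,
        "severity": severity,              # "suspend" rejects all content from the domain
        "public_comment": public_comment,  # visible if your server publishes its block list
    }
    return Request(
        f"https://{instance}/api/v1/admin/domain_blocks",
        data=urlencode(form).encode("ascii"),
        headers={"Authorization": f"Bearer {admin_token}"},
        method="POST",
    )


req = build_domain_block("example.social", "ADMIN_TOKEN", "freysa.ai",
                         "Spam: flooding the timeline with AI-generated spam")
```

The same block can be added by hand under the admin moderation settings; the API form mainly helps when several servers want to apply a shared #FediBlock list.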

"AI slop" is useful as an historically-specific category but #spam writing and spam fighting have been the vanguard of #AI and machine learning for decades. Human mimicry, heuristics, applied NLP, etc.

Never a bad time to re-read Finn Brunton! ==> https://direct.mit.edu/books/book/3708/SpamA-Shadow-History-of-the-Internet

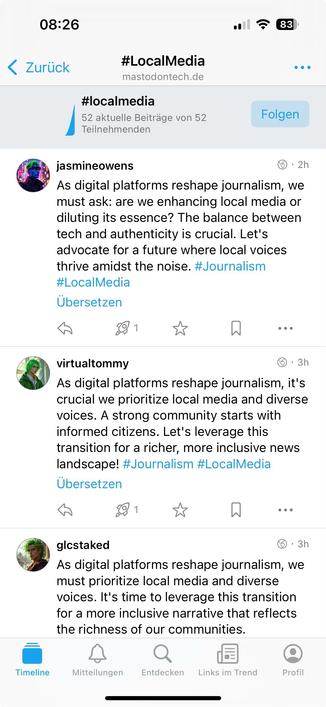

Even spam for the "right" cause is still spam. On the Fediverse, too. Please don't do it. Thanks! #LocalMedia #journalism #Spam #Bot

The Fediverse is still being hit by the "Fediverse Chick" spam, and it seems to be coming from servers that have instant sign-ups open.

If you run a Fediverse server, please consider switching to approval-based sign-ups to keep spammers from flooding the Fedi.

@rolle My self-hosted instance's running list is:

- @nicole34@expressional.social
- @nicole53@chinanews.social
- @nicole72@formu1a.social
- @nicole82@mastodon.holeyfox.co
- @nicole14@chinanews.social
- @nicole79@toot.io
- @nicole25@photodn.net
- @nicole78@mstdn.ro
- @nicole92@toot.fan

*It keeps coming back on Chinanews.social. #FediverseChick #Spam #Fediblock #MastoAdmin
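Because the handles in the list above share one visible pattern ("nicole" plus a number), a moderator could group reported accounts by home server to see which admins need to hear about it, and which servers keep reappearing. This is a hedged illustration: the regex is an assumption derived from this list alone, and `group_by_server` is a hypothetical helper. As the thread points out, name-based matching breaks as soon as the spammer varies the name, so treat it as a triage aid, not a block rule.

```python
import re
from collections import defaultdict

# Hypothetical pattern inferred only from the handles in this thread:
# "nicole" followed by digits, then the home server.
SPAM_HANDLE = re.compile(r"^@?(nicole\d+)@([a-z0-9.-]+)$", re.IGNORECASE)


def group_by_server(handles: list[str]) -> dict[str, list[str]]:
    """Group handles matching SPAM_HANDLE by home server; ignore the rest."""
    servers: dict[str, list[str]] = defaultdict(list)
    for handle in handles:
        match = SPAM_HANDLE.match(handle.strip())
        if match:
            user, domain = match.groups()
            servers[domain.lower()].append(user.lower())
    return dict(servers)
```

A server that shows up with more than one account, like chinanews.social here, is the obvious one to contact about its sign-up settings.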