Quick #accessibility tip for iPhone users: iOS can read your screen aloud to you. Turn on Speak Screen in Settings > Accessibility > Spoken Content.

Then swipe down from the top of the screen with two fingers to hear a spoken version of your screen. Really handy for checking how your post will be read to blind people.

#accessibility

Today's Web Design Update: https://groups.google.com/a/d.umn.edu/g/webdev/c/KLoXK3jgexg

Featuring @SteveFaulkner, @joedolson, @nathanwrigley, @aardrian, @TPGi, @a11ytalks, @rachelandrew, @heydon, @css, @Una, @leaverou, @pawelgrzybek, @brucelawson, @hdv, and more.

Subscribe info: https://www.d.umn.edu/itss/training/online/webdesign/webdev_listserv.html#subscribe

hey @FediTips

@mastodonmigration

@Mastodon

this post is about the hCaptcha accessibility cookies used for bot checks at sign-up

i don't know if mastodon has addressed this, but if it's still the case, i think it needs to be talked about more

spending 45 minutes completing an inaccessible captcha is ridiculous, and it shouldn't happen to begin with

Thanks, Jack White!

"On Sunday night, the Cactus Club launched a campaign with a video on social media, explaining that if all of their followers donated just one dollar, they would have more than enough funds to pay for the remainder of the construction. On Monday, they received a surprise donation from none other than Jack White, who kicked in the $20,000 necessary to fund the bump out extension."

https://onmilwaukee.com/articles/jack-white-cactus-club-donation

@IAmDannyBoling @georgetakei We need to have a bigger discussion about the emotional labor of this (and the etiquette of demanding it from others in public), if only because it is 2025, an era in which automating this is becoming a realistic expectation.

It is time to get sanctimonious, not at all users but at developers of screen readers (and client software, though those days are also drawing to a close), because #accessibility for all and #AltText for all should be an automatic privilege.

This isn’t on the same level as hiding topics behind warning tags.

I am posting this because of the developer's wonderful enthusiasm about accessibility. When I asked whether this app is accessible with TalkBack, this was the response I received.

Comment from Hakanft on r/Android:

"Thanks for asking — and that's a really important point!

The app was built using React Native (with Expo), so it should support basic TalkBack functionality out of the box. However, I haven’t fully optimized or tested it for screen reader accessibility yet.

Your question made me realize I need to improve this — and I will! If you're open to sharing feedback after trying it, I'd truly appreciate it."

The app itself is not only a great idea but also potentially very helpful. It is a fun way to track your water intake and make sure you are drinking enough water each day. It also supports twenty-nine languages! Below is the full discussion on Reddit, complete with iOS and Android links.

https://www.reddit.com/r/Android/comments/1lzl46o/just_launched_my_free_multilingual_water_reminder/

Later today, I'll be trying out RIM with a friend. Let's see how it does! This will be immensely interesting.

Not everyone can access alt-text. Sighted people need a mouse/trackball/touchpad/trackpoint or a touch screen to access alt-text. And in order to operate that, they need at least one working hand. But not everyone has working hands. Just like not everyone can see, which is why you describe your images in the first place, right?

For those who can't access alt-text, any information that appears only in alt-text, and neither in the post text nor in the image itself, is inaccessible and lost. They can't open it, so they can't read it.

Here are three relevant pages in my (very early WIP) wiki about image descriptions and alt-text:

- Can everyone access alt-text?

- Don't explain things or give other information only in alt-text!

- Don't use alt-text to write around your character limit!

#Long #LongPost #CWLong #CWLongPost #AltText #AltTextMeta #CWAltTextMeta #Disability #A11y #Accessibility

“Sassy Outwater-Wright: Accessibility Champion and More, Dies at 42” by @LFLegal:

https://www.lflegal.com/2025/07/sassy-outwater/

The “and more” is doing a lot of work there, because Sassy was so much more.

A great article on avoiding binary thinking in design, from @Cjforms.

"The world is analog and yes/no is a binary choice, so the two options rarely reflect the real world entirely accurately."

#OpenSource is a can of worms when #Accessibility is ignored.

Free Software is not meant to exclude others.

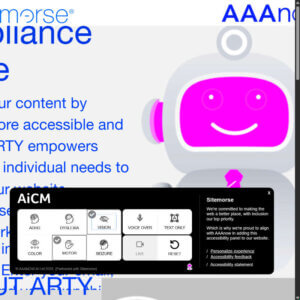

There is yet another accessibility overlay vendor out there, though it makes no conformance guarantees. Nor security or privacy guarantees.

“#ARTY Could Get You Sued”

https://adrianroselli.com/2025/07/arty-could-get-you-sued.html

Progress! Not a win yet.

“Due to the receipt of significant adverse comments […] the effective date of the direct final rule […] is delayed until September 12, 2025.”

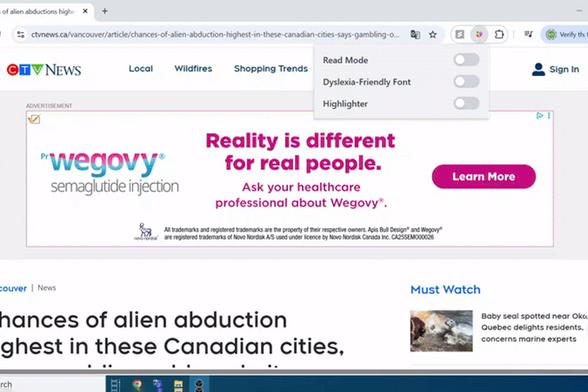

#Chrome #accessibility #neurodiversity

'Pop-up ads, hard-to-read fonts and robotic voices can overwhelm neurodivergent readers. LumiRead, a new Chrome extension built by a Northeastern computer science grad student, solves that.'

https://news.northeastern.edu/2025/07/09/chrome-accessibility-extension-lumiread/

a label and a name walk into a bar

"When is a label also an (accessible) name, when is it not and when is it neither?"

https://html5accessibility.com/stuff/2025/07/14/a-label-and-a-name-walk-into-a-bar/

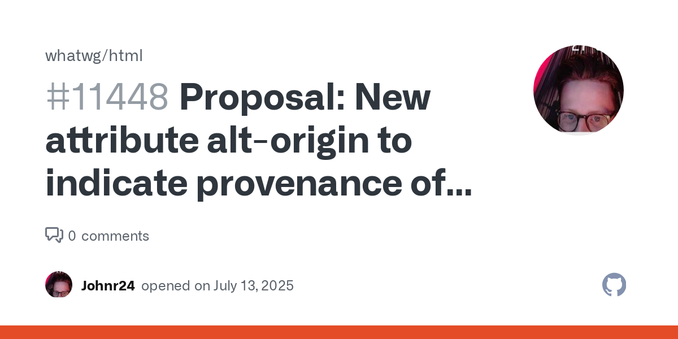

I've submitted a proposal to WHATWG about a provenance/origin classifier for alt text on images.

https://github.com/whatwg/html/issues/11448

The current HTML specification provides the alt attribute on images to supply alternative text for users who cannot see visual content. However, there is no way to indicate whether the alt text was authored by a human or generated automatically by a machine (e.g., via AI or computer vision).

Venting.

If I have to open the browser's dev tools, dig through the DOM to find where you've set the background colour of the page, and then change it manually, just to be able to read what you've written, you have failed utterly at web design, user experience, and accessibility simultaneously.

Yes, I'm looking at you, https://www.meditationsinanemergency.com/please-shout-fire-this-tis-burning/ .

How are the #macOS betas currently? Is anything majorly broken, particularly where #VoiceOver or app compatibility is concerned? #accessibility

Tuba: a desktop #fediverse client for #GNU #Linux. Here's a nice review: https://news.itsfoss.com/tuba/ If you install #Debian 13 with the #GNOMEDesktop, it is already installed. It can also be installed from the terminal with #APT. I like it so far, but I'm looking for #GUI customizations. Glad to learn there's an #accessibility preferences panel.

Have you tried RIM? It's an accessible way to get technical support remotely. It's somewhat similar to JAWS Tandem and NVDA Remote, but has more features. It's currently free until July 18th, so if you've been wanting to try it out, now is the time!