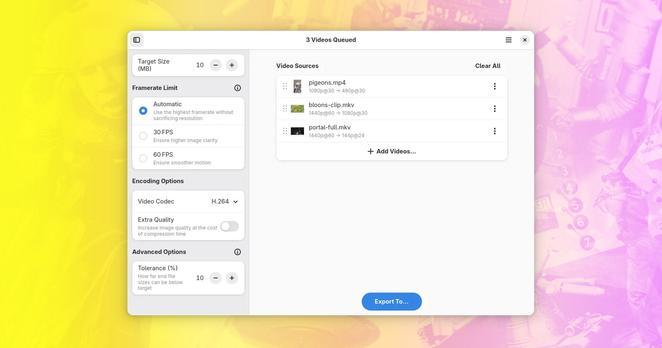

Want to compress a video to a specific file size? Constrict, a new Linux tool built with Python and GTK4 and powered by FFmpeg, can do it.

https://www.omgubuntu.co.uk/2025/07/constrict-linux-video-compressor-ffmpeg-gui-ubuntu
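The post doesn't say how Constrict picks its settings, but a common way to hit a target file size is to derive a video bitrate from the size budget and then encode in two passes. A minimal sketch of that general technique (not Constrict's code), assuming libx264 video with AAC audio; the function names, the 128 kbit/s default audio budget and the use of decimal megabytes (1 MB = 8,000 kbit) are illustrative assumptions:

```shell
#!/usr/bin/env bash
# Hypothetical helpers illustrating target-size encoding; not Constrict's code.

# Video bitrate (kbit/s) that fits SIZE_MB megabytes over DURATION_S seconds,
# after reserving AUDIO_KBPS for the audio track. Uses decimal MB
# (1 MB = 8000 kbit) and integer arithmetic, so the result rounds down.
target_video_kbps() {
  local size_mb=$1 duration_s=$2 audio_kbps=$3
  echo $(( size_mb * 8000 / duration_s - audio_kbps ))
}

# Two-pass libx264 encode at the computed bitrate. Pass 1 only analyses the
# video (audio disabled, output discarded); pass 2 writes the real file.
# Usage: encode_to_size INPUT OUTPUT SIZE_MB DURATION_S [AUDIO_KBPS]
encode_to_size() {
  local input=$1 output=$2 size_mb=$3 duration_s=$4 audio_kbps=${5:-128}
  local vkbps
  vkbps=$(target_video_kbps "$size_mb" "$duration_s" "$audio_kbps")
  ffmpeg -y -i "$input" -c:v libx264 -b:v "${vkbps}k" -pass 1 -an -f null /dev/null &&
  ffmpeg -y -i "$input" -c:v libx264 -b:v "${vkbps}k" -pass 2 \
         -c:a aac -b:a "${audio_kbps}k" "$output"
}
```

For example, `encode_to_size clip.mp4 small.mp4 8 60` aims for roughly 8 MB for a 60-second clip (about 938 kbit/s of video). The duration must be a whole number of seconds here; `ffprobe -v error -show_entries format=duration -of csv=p=0 clip.mp4` reports it. Container overhead and rate-control drift mean the result can land slightly over the target, so leaving a small margin is sensible.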

Playing with stereo pair labels in the waveform viz tool. So incredibly wild that #ffmpeg just lets me do all this.

(short animation, no sound, ironically)

FFmpeg in plain English – LLM-assisted FFmpeg in the browser

Ok, any #video folks out there who know how to do what I want to do? I don't know what words to search for because I don't know what this technique is called. Boosts welcome, suggestions welcome.

I have a pool cleaning robot. Like a roomba, but for the bottom of the pool. We call it poomba. Anyways, I want to shoot an MP4 video with a stationary camera (a GoPro) looking down on the pool while the robot does its work. So I will have this overhead video of like 3-4 hours.

I want to kinda overlay all the frames of the video into a single picture. So the areas where the robot drove will be dark streaks (the robot is black and purple). And any area the robot didn't cover would show the white pool bottom. Areas the robot went over a lot would be darker. Areas it went rarely would be lighter.

I'm just super curious how much coverage I actually get. This thing isn't a roomba. It has no map and it definitely doesn't have an internet connection at the bottom of the pool. (Finally! A place they can't get AI, yet!) It's just using lidar, motion sensors, attitude sensors and some kind of randomizing algorithm.

I think of it like taking every frame of the video and compositing it down at something like 0.001 opacity. By the end of the video, the things that never changed (the pool itself) would be at full brightness and clear, while the robot's paths would be faint, except where it passed repeatedly, which would be darker.

I could probably rip it into individual frames using #ffmpeg and then do this compositing with #ImageMagick or something (I'm doing this on #Linux). But 24fps x 3600 seconds/hour x 3 hours == about 260K frames. My laptop will take ages to brute force this. Any more clever ways to do it?

If I knew what this technique/process was called, I'd search for it.
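What's described here is usually called frame averaging or image stacking (a digital "long exposure"). One way to keep it manageable is to sample far fewer frames than the camera recorded and average them in batches, so ImageMagick never has to hold every frame in memory at once. A minimal sketch, assuming bash, ImageMagick 7 (`magick`), one sampled frame per second downscaled to 640 px wide, and a hypothetical input name `poomba.mp4`; the function names and batch size are illustrative:

```shell
#!/usr/bin/env bash
# Frames to process: FPS * 3600 s/h * HOURS.
frame_count() {
  echo $(( $1 * 3600 * $2 ))
}

# Average a stationary-camera video into one "long exposure" image.
# Usage: average_frames INPUT WORKDIR [BATCH_SIZE]
# Result: WORKDIR/coverage.png
average_frames() {
  local input=$1 workdir=$2 batch=${3:-100}
  mkdir -p "$workdir/frames" || return 1

  # Keep one frame per second and shrink it; coverage doesn't need full resolution.
  ffmpeg -i "$input" -vf "fps=1,scale=640:-2" "$workdir/frames/%06d.png" || return 1

  # Split the (sorted) frame list into files of $batch names each.
  printf '%s\n' "$workdir"/frames/*.png | split -l "$batch" - "$workdir/list_"

  # Mean image of each batch.
  local list
  local -a files
  for list in "$workdir"/list_*; do
    mapfile -t files < "$list"
    magick "${files[@]}" -evaluate-sequence mean "$workdir/mean_${list##*/}.png" || return 1
  done

  # Mean of the batch means. With equal batch sizes this equals the overall
  # mean; a shorter final batch gets slightly more weight than it should.
  magick "$workdir"/mean_*.png -evaluate-sequence mean "$workdir/coverage.png"
}
```

Usage: `average_frames poomba.mp4 work`. At one frame per second, a 3-hour video is 10,800 frames instead of about 260K. The averaged trails will be faint, so stretching the contrast afterwards (for example `magick work/coverage.png -auto-level coverage-stretched.png`) may help. Swapping `mean` for `min` in both `magick` calls would instead mark every spot the robot ever covered, without the "how often" shading.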

A developer managed to reverse pixelation in video using FFmpeg, GIMP and edge detection - no AI involved.

By analyzing motion and edges across frames, they could reconstruct the original content from pixelated areas.

It’s a reminder: pixelation is visual, not secure.

Code & demo: https://github.com/KoKuToru/de-pixelate_gaV-O6NPWrI

So I have hundreds of ~1-minute videos recorded on my phone ~10 years ago, and they generally don’t have great compression, nor are they stored in a modern video format.

For archiving purposes, I want to take advantage of my workstation’s mighty GPU to process them so that the quality is approximately the same, but the file size would be strongly reduced.

However, compressing video is terribly hard, way more complex than compressing pictures, so I wouldn’t really know how to go about it: what format to use, what codec, what bitrate, what parameters to keep an eye on, etc.

I don’t care if the compression takes a lot of time, I just want smaller but good looking videos.

Any tips? (Links to guides and tutorials are ok too)

Also, unfortunately I am forced to use Windows for this (don’t ask me why), but I know nothing about Windows because I hate it. Practical software suggestions are very much welcome, too!
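Since encoding time doesn't matter here, a software encoder is usually the better fit: GPU encoders such as NVENC are built for speed and generally need more bits than x265 for similar visual quality. A minimal sketch, assuming a bash shell on Windows (WSL or Git Bash) and an ffmpeg build that includes libx265; the CRF of 24, the `slow` preset, the `_x265.mp4` naming and the function names are starting-point assumptions to check by eye on a few clips, not tuned values:

```shell
#!/usr/bin/env bash
# Output name for an archived copy: clips/VID_2015.mp4 -> clips/VID_2015_x265.mp4
archive_name() {
  echo "${1%.*}_x265.mp4"
}

# Re-encode one clip to HEVC with constant-quality (CRF) rate control.
# Lower CRF = higher quality and bigger files. Audio is copied unchanged,
# metadata (e.g. recording date) is carried over, and -n refuses to overwrite.
# -tag:v hvc1 helps Apple players recognise HEVC in MP4.
archive_clip() {
  local input=$1
  ffmpeg -n -i "$input" -map_metadata 0 \
         -c:v libx265 -crf 24 -preset slow -tag:v hvc1 \
         -c:a copy "$(archive_name "$input")"
}

# Process every .mp4 in a directory, one clip at a time.
archive_dir() {
  local f
  for f in "$1"/*.mp4; do
    case $f in *_x265.mp4) continue ;; esac   # skip already-converted copies
    archive_clip "$f" || echo "failed: $f" >&2
  done
}
```

Comparing a handful of outputs against the originals before converting everything is worthwhile. If the GPU route is preferred anyway, ffmpeg exposes NVIDIA's HEVC encoder as `hevc_nvenc`.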

@WeirdWriter

Must do a search on how to use #FFmpeg with Linux!

Finally got #FFmpeg working as a fully functional screen recorder, and as a podcast recorder too! No more downloading third-party tools for things FFmpeg can already do. Maybe now I can make PeerTube videos more easily.
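The post doesn't say which setup was used. On a Linux desktop running X11 with PulseAudio (or PipeWire's PulseAudio compatibility), a screen-plus-microphone recorder could look roughly like the sketch below. The function names, the `DRY_RUN` switch, the default `1920x1080` size and the encoder settings are assumptions for illustration; Wayland sessions generally need a different capture method than `x11grab`.

```shell
#!/usr/bin/env bash
# Run a command, or just print it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then printf '%s\n' "$*"; else "$@"; fi
}

# Screen + default microphone -> H.264/AAC video.
# Usage: record_screen OUTPUT [WIDTHxHEIGHT]   (stop with q)
record_screen() {
  local out=$1 size=${2:-1920x1080}
  run ffmpeg -f x11grab -video_size "$size" -framerate 30 -i "${DISPLAY:-:0.0}" \
      -f pulse -i default \
      -c:v libx264 -preset veryfast -crf 23 -pix_fmt yuv420p \
      -c:a aac -b:a 128k "$out"
}

# Microphone only -> lossless FLAC, e.g. for a podcast track.
# Usage: record_audio OUTPUT
record_audio() {
  run ffmpeg -f pulse -i default -c:a flac "$1"
}
```

`DRY_RUN=1 record_screen talk.mkv` prints the command without recording. Writing to `.mkv` rather than `.mp4` tends to be safer for recordings, since an interrupted MP4 may be missing the index it writes at the end.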

Speak faster… or give me tokens… nah, just speak faster.

#transcriptions #llm #gpt #openAI #ffmpeg #ffmpeg4live

https://george.mand.is/2025/06/openai-charges-by-the-minute-so-make-the-minutes-shorter/
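The idea in the linked post is that a per-minute price drops if the audio is sped up before upload. In ffmpeg that is usually done with the `atempo` filter, which changes speed without shifting pitch; older builds cap a single `atempo` at 2.0, so larger factors are chained. A minimal sketch for speed-ups (factors above 1 only); the function names are assumptions, and the post's own settings may differ:

```shell
#!/usr/bin/env bash
# Build an atempo chain for a speed-up FACTOR, using steps of at most 2.0
# so it also works on builds where one atempo instance is capped at 2.0.
atempo_chain() {
  awk -v f="$1" 'BEGIN {
    chain = ""
    while (f > 2.0) { chain = chain "atempo=2.0,"; f /= 2.0 }
    printf "%satempo=%g\n", chain, f
  }'
}

# Speed up the audio of INPUT by FACTOR and drop any video stream.
# Usage: speed_up INPUT OUTPUT FACTOR
speed_up() {
  ffmpeg -i "$1" -vn -filter:a "$(atempo_chain "$3")" "$2"
}
```

For example, `speed_up talk.m4a talk-2x.mp3 2` halves the duration, and a factor of 3 becomes `atempo=2.0,atempo=1.5`. How far it can be pushed before transcription accuracy suffers is worth testing rather than assuming.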

Just a thought, from a knuckle-dragging biology scientist. TL;DR: I believe there is scope to make the hosting of a peertube instance even more lightweight in the future.

Some time ago I read about people using #webAssembly to transcode video in a user's web browser. https://blog.scottlogic.com/2020/11/23/ffmpeg-webassembly.html

Since then, I believe #WebGPU has done/is doing some clever things to improve the browser's access to the device's GPU.

I have not seen any #peertube capability that offloads video transcoding to the user in this way.

I imagine, though, that this would align well with peertube's agenda of lowering the bar to entry into web-video hosting, so I cannot help but think that this will come in time.

My own interest is seeing a #Piefed (activitypub) instance whose web pages could #autotranslate posts into the user's own language using the user's own processing power... One day, maybe!

Thank you again for all your hard work; it is an inspiration.

Re: https://okla.social/@johnmoyer/114738149453494692

https://www.rsok.com/~jrm/2025May31_birds_and_cats/video_IMG_3666c_2.mp4

ffmpeg -loop 1 -i IMG_3666cs_gimp.JPG -y -filter_complex "[0]scale=1200:-2,setsar=1:1[out];[out]crop=1200:800[out];[out]scale=8000:-1,zoompan=z='zoom+0.005':x=iw/3.125-(iw/zoom/2):y=ih/2.4-(ih/zoom/2):d=3000:s=1200x800:fps=30[out]" -vcodec libx265 -map "[out]" -map 0:a? -pix_fmt yuv420p -r 30 -t 30 video_IMG_3666c_2.mp4
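The `x` and `y` expressions in that command are what keep the push-in aimed at one spot. In `zoompan`, `iw` and `ih` are the input size (here the 8000-pixel-wide upscale) and the visible window is `iw/zoom` by `ih/zoom`, so writing z for `zoom` and ignoring any clamping at the image edges, the window centre works out as:

```latex
\begin{aligned}
x_c &= x + \frac{i_w}{2z} = \frac{i_w}{3.125} - \frac{i_w}{2z} + \frac{i_w}{2z} = 0.32\,i_w \\
y_c &= y + \frac{i_h}{2z} = \frac{i_h}{2.4} - \frac{i_h}{2z} + \frac{i_h}{2z} \approx 0.417\,i_h
\end{aligned}
```

The centre does not depend on z, so as `z='zoom+0.005'` raises the zoom by 0.005 per output frame, the view closes in on a point about 32% of the way across and 42% of the way down the image.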

@snacks That's because #ffmpeg was built by more people and can use more acceleration options (SSE through SSE4.2) beyond vendor- and generation-specific proprietary code (GPU encoders and decoders expect to be fed a native bitstream, like the hardware in dedicated CD/DVD/Blu-ray players).

Not to mention that big players, from Alibaba to Zoom, from Amazon to Netflix and from Apple to Sony, have a vested interest in its performance, because bandwidth, storage and computing power are expensive for them.

My "couldn't let it go" #ADHD project this week has been optimizing my faux #CUSeeMe webcam view for my #twitch streams and then making it more accurately emulate the original #ConnectixQuickCam:

https://github.com/morgant/CU-SeeMe-OpenBSD

Another project that has helped me better understand #ffmpeg & #lavfi, #mpv, and how to create complex filters.

@debby It really comes down to hardware nowadays. Software like #Kdenlive #Krita #Flowblade #Shotcut #GMIC #Inkscape #Pencil2D #GIMP #Friction #Synfig #Glaxnimate #OBS #Blender #Handbrake #FFmpeg #Imagemagick and #Natron tends to offer powerful options that let users achieve impressive results.

Usually, I'm quite happy with #Linux:

It just works, and I don't have to think about it at all.

But sometimes, I reckon: If it sucks, it sucks badly.

I use the command-line video tool #ffmpeg quite a lot, but yesterday it just stopped working, first producing wrong results and then weird error messages.

Searching the web for the error messages turned up lots of results from the 2010s, plus Reddit threads where the issue was never resolved.

"OK", I thought, "Let's do what everyone does":

1/3

While #ffmpeg can turn our "show" into a "podcast", it can’t turn our show into an enjoyable experience for our listeners.

Sorry about that.

Talk is cheap, send patches.

@jcrabapple I had a few stumbles with #JellyFin at the start (it needs a special, but sanctioned, build of #ffmpeg on my platform), but once I figured that out it has been smooth sailing and I'm quite enjoying it. Getting the metadata right does rely on proper media curation, but #Filebot has been super helpful there and is worth its one-time cost.

If only there were #xbox and #ps5 clients for it; right now I use a low-power Linux box for playback on my TV.

3/3 - The Making Of

I kept getting #HTTP 413 "Payload Too Large" errors on my instance. It's not actually mine-mine, I'm just a guest here: layer8.space is run by @Sammy8806 (thanks, mate).

First, there was the original 1080p60 recording with audio.

Then I trimmed it and rendered it out as 720p30 with audio on the Galaxy S7.

On the PC, using #ffmpeg, I removed the audio and rendered it out at 15 fps.

I also trimmed it to 5 seconds.

ffmpeg -i src.mp4 -an -c:v libx264 -vf "fps=15" -t 5 out-15fps-noaudio-5s.mp4
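HTTP 413 means the upload was larger than the server is configured to accept, so the workflow above amounts to "shrink until it fits". A minimal sketch that automates the frame-rate step while keeping the command's other settings; the function names, the list of frame rates tried and the byte limit passed in are assumptions (the real limit depends on how the instance is configured):

```shell
#!/usr/bin/env bash
# True if FILE is at most MAX_BYTES bytes long.
fits_limit() {
  local size
  size=$(wc -c < "$1") || return 2
  [ "$((size))" -le "$2" ]
}

# Re-encode SRC (no audio, first 5 s) at falling frame rates until the
# result fits under MAX_BYTES; prints the name of the file that fits.
# Usage: shrink_until_fits SRC MAX_BYTES
shrink_until_fits() {
  local src=$1 max=$2 fps out
  for fps in 30 24 15 10; do
    out="out-${fps}fps-noaudio-5s.mp4"
    ffmpeg -y -i "$src" -an -c:v libx264 -vf "fps=$fps" -t 5 "$out" || return 1
    if fits_limit "$out" "$max"; then
      echo "$out"
      return 0
    fi
  done
  return 1   # nothing fit; a lower resolution or higher CRF would be next
}
```

Frame rate is only one lever; lowering the resolution (for example adding `scale=-2:480` to the `-vf` chain) usually saves more.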